Tag: Lasso

- GhostKnockoffs: Only Summary Statistics

- Statistical Learning and Selective Inference

- Exact Post-Selection Inference for Sequential Regression Procedures

- Adaptive Ridge Estimate

-

Leave-one-out CV for Lasso

This note is for Homrighausen, D., & McDonald, D. J. (2013). Leave-one-out cross-validation is risk consistent for lasso. ArXiv:1206.6128 [Math, Stat].

- Bayesian Sparse Multiple Regression

-

Cross-Validation for High-Dimensional Ridge and Lasso

This note collects several references on cross-validation research.

-

Debiased Lasso

This post is based on Section 6.4 of Hastie, Trevor, Robert Tibshirani, and Martin Wainwright. “Statistical Learning with Sparsity,” 2016, 362.

-

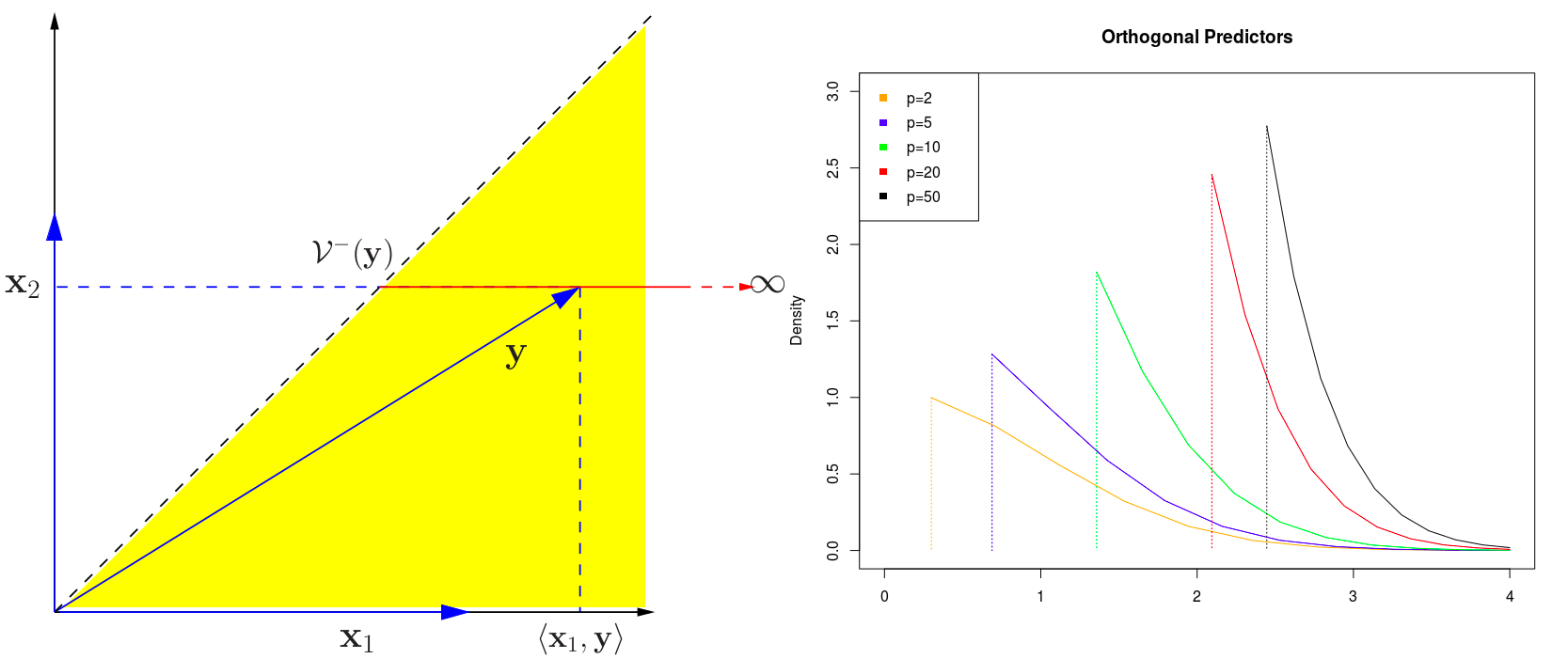

Least Squares for SIMs

In the last lecture of STAT 5030, Prof. Lin shared one of the results in the paper Neykov, M., Liu, J. S., & Cai, T. (2016). L1-Regularized Least Squares for Support Recovery of High Dimensional Single Index Models with Gaussian Designs. Journal of Machine Learning Research, 17(87), 1–37, namely the lemma that serves as the starting point of the paper. Its condition and conclusion seem to be exactly the same as those of Sliced Inverse Regression, except that it has a direct interpretation as least squares regression.

-

Statistical Inference for Lasso

This note is based on Chapter 6 of Hastie, T., Tibshirani, R., & Wainwright, M. (2015). Statistical Learning with Sparsity. 362.

-

Theoretical Results of Lasso

Prof. Jon A. WELLNER introduced an application of a new multiplier inequality to the lasso in his distinguished lecture, which reminded me that I need to read more theoretical results on the lasso. This post is the result, and it is based on Hastie, T., Tibshirani, R., & Wainwright, M. (2015). Statistical Learning with Sparsity. 362.

-

Presistency

The paper, Greenshtein and Ritov (2004), is recommended by Larry Wasserman in his post Consistency, Sparsistency and Presistency.