FARM-Test

Posted on

Abstract

- Background: large-scale multiple testing with correlated and heavy-tailed data

- Challenges:

- conventional methods for estimating the false discovery proportion (FDP) often ignore the effect of heavy-tailedness and the dependence structure among test statistics, and thus may lead to inefficient or even inconsistent estimation

- often impose the assumption of joint normality, which is too stringent for many applications

- Proposal:

- propose a factor-adjusted robust procedure for large-scale simultaneous inference with control of the false discovery proportion

- demonstrate that robust factor adjustments are important in both improving the power of the tests and controlling FDP

- byproduct: an exponential-type deviation inequality for a robust $U$-type covariance estimator under the spectral norm

- extensive numerical experiments demonstrate the advantage of the proposed method over several state-of-the-art methods especially when the data are generated from heavy-tailed distributions.

Introduction

- large-scale multiple testing problems with independent test statistics have been extensively explored, but correlation effects often exist across many observed test statistics.

- validity of standard multiple testing procedures have been studies under weak dependencies. For example, the B-H procedure or Storey’s procedure is still able to control the FDR or false discovery proportion when only weak dependencies are present.

- But multiple testing under general and strong dependence structures remains a challenge. Directly applying standard FDR controlling procedures developed for independent test statistics in this case can lead to inaccurate false discovery control and spurious outcomes.

- the paper focus on the case where the dependence structure can be characterized by latent factors, that is, there exist a few unobserved variables that correlate with the outcome.(other possible way to model the dependence) A multi-factor model is an effective tool for modeling dependence, which relies on the identification of a linear space of random vectors capturing the dependence structure of the date

- some authors assume a strict factor model with independent idiosyncratic errors, and use the EM algorithm to estimate the factor loading as well as the realized factors. The FDP is then estimated by subtracting out the realized common factors

- Fan et al. (2012) considered a general setting for estimating the FDP, where the test statistics follow a multivariate normal distribution with an arbitrary but known covariance structure.

- Fan and Han (2017) used POET estimator to estimate the unknown covariance matrix, and then proposed a fully data-driven estimate of the FDP.

- Wang et al. (2015) considered a more complex model with both observed primary variables and unobserved latent factors

- all the methods above assume joint normality of factors and noise, but normality is really an idealization of the complex random world.

- the distribution of the normalized gene expressions is often far from normal

- heavy-tailed data frequently appear in many other scientific fields, such as financial engineering and biomedical imaging

- in functional MRI studies, the parametric statistical methods failed to produce valid clusterwise inference, where the principal cause is that the spatial autocorrelation functions do not follow the assumed Gaussian shape.

- the paper investigate the problem of large-scale multiple testing under dependence via an approximate factor model, where the outcome variables are correlated with each other through latent factors. Four steps of the factor-adjusted robust multiple testing (FARM-Test) procedure

- consider an oracle factor-adjusted procedure given the knowledge of the factors and loadings

- using the idea of adaptive Huber regression, consider estimating the realized factors if the loadings were known and provide a robust control of the FDP.

- propose two robust covariance matrix estimators, a robust U-type covariance matrix estimator and another one based on elementwise truncation. Then apply spectral decomposition to these estimators and use principal factors to recover the factor loadings

- given a fully data-driven testing procedure based on sample splitting: use part of the data for loading construction and other part for simultaneous inference

- a numerical example: due to the existence of latent factors and heavy-tailed errors, there is a large overlap between sample means from the null and alternative, which makes it difficult to distinguish them from each other. With the help of either robustification or factor-adjustment, the null and alternative are better separated.

FARM-Test

- $\bX = (X_1,\ldots,X_p)’$: $p$ dimensional random vector with mean $\bmu = (\mu_1,\ldots,\mu_p)’$ and covariance matrix $\bSigma$.

- $\bX = \bmu + \B\bff + \bvarepsilon$

- $\B = (\bfb_1,\ldots,\bfb_p)’\in \bbR^{p\times K}$

- $\bff = (f_1,\ldots,f_K)’\in \bbR^K$

- Consider simultaneously testing the following hypotheses:

based on the observed data $\{\bX_i\}_{i=1}^n$.

- for each $1\le j\le p$, let $T_j$ be a generic test statistic for testing the individual hypothesis $H_{0j}$. The number of total discoveries $R(z)$ and the number of false discoveries $V(z)$ can be written as

- $R(z) = \sum_{j=1}^p1(\vert T_j\vert \ge z)$

- $V(z) = \sum_{j\in H_0}1(\vert T_j\vert \ge z)$

- $p_0=\vert H_0\vert = \sum_{j=1}^p1(\mu_j=0)$

- focus on controlling the false discovery proportion, $\mathrm{FDP}(z) = V(z)/R(z)$. Note that $R(z)$ is observable given the data, while $V(z)$ depends on the set of true nulls, is an unobserved random quantity that needs to be estimated.

- for any $1 \le j \le p$, with a robustification parameter $\tau_j >0$, consider the following adaptive and robust $M$-estimator of $\mu_j$ \(\hat \mu_j = \argmin_{\theta\in\bbR}\sum_{i=1}^n\ell_{\tau_j}(X_{ij}-\theta)\,,\) where the dependence of $\hat\mu_j$ on $\tau_j$ is suppresses for simplicity.

- $\hat \mu_j$’s can be regarded as robust versions of the sample averages $\bar X_j=\mu_j+\bfb_j’\bar \bff+\varepsilon_j$.

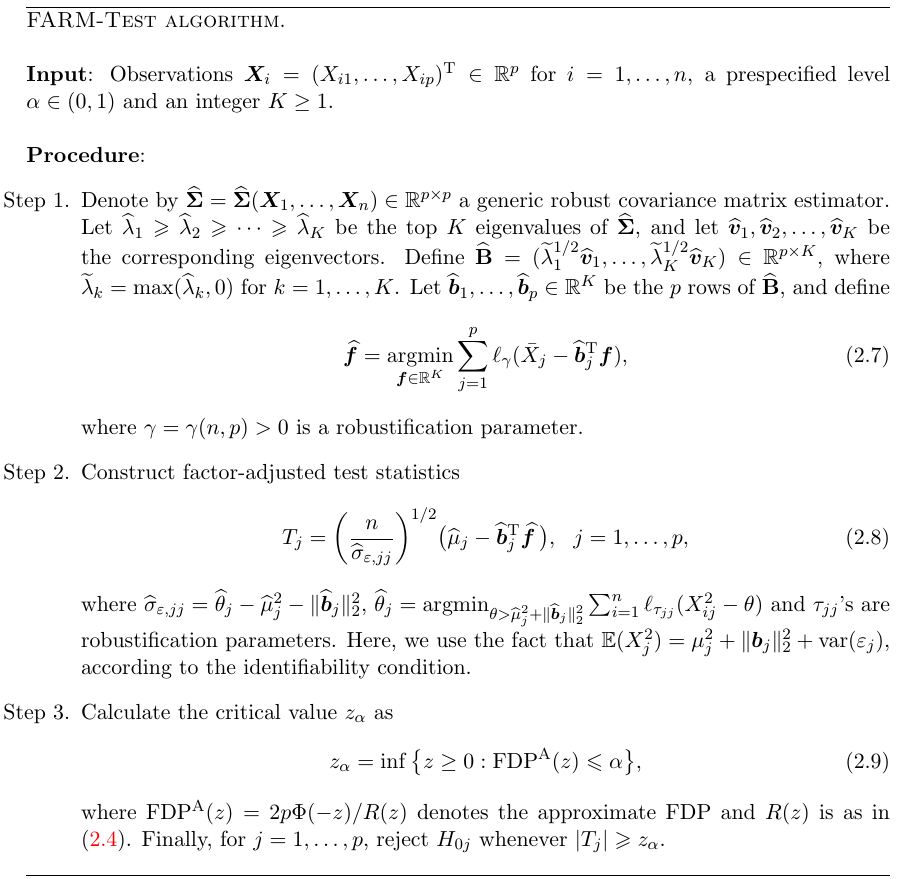

- given a prespecified level $\alpha\in(0,1)$, three steps for testing procedure:

- robust estimation of the loading vectors and factors

- construction of factor-adjusted marginal test statistics and their p-values

- computed the critical value or threshold level with the estimated FDP controlled at $\alpha$.

- In the high dimensional and sparse regime, where both $p$ and $p_0$ are large and $p_1=p-p_0=o(p)$ is relatively small, then $\mathrm{FDP}$ can be approximated by $\mathrm{FDP}^A=2p\Phi(-z)/R(z)$. But if the proportion $\pi_0=p_0/p$ is bounded from 1 as $p\rightarrow \infty$, $\mathrm{FDP}^A$ tends to overestimate the true FDP.

- The estimation of $\pi_0$ has been known as an interesting problem. A more adaptive method is to combine the above procedure with other’s method, such as Storey’s approach. For a predetermined $\eta\in[0,1)$, Storey (2002) suggested to estimate $\pi_0$ by

Then a modified estimate of $\mathrm{FDP}$ becomes

\(\mathrm{FDP}^A(z;\eta) = 2p\hat\pi_0(\eta)\Phi(-z)/R(z)\,, z\ge 0\)

- Sample splitting: To avoid mathematical challenges by the reuse of the sample. Split the data into two halves $\cX_1$ and $\cX_2$. Use $\cX_1$ to estimate $\bfb_1,\ldots,\bfb_p$. Proceed with the remain steps in the FARM-Test. (what if no sample splitting, or other methods to avoid this problem to use full information in the data, such as some procedures like cross validation?)

Simulations

- FARM-H

- FRAM-U

- FAM: non-robust factor-adjusted procedure

- PFA: principal factor approximation

- Naive: completely ignore the factor dependence.

(what if the method BH which can handle weak dependence)