Multiple human tracking with RGB-D data


This note is based on the survey paper Camplani, M., Paiement, A., Mirmehdi, M., Damen, D., Hannuna, S., Burghardt, T., & Tao, L. (2016). Multiple human tracking in RGB-depth data: A survey. IET Computer Vision, 11(4), 265–285.

Abstract

Multiple human tracking (MHT) is a fundamental task in many computer vision applications.

Appearance-based approaches, primarily formulated on RGB data, are constrained and affected by problems arising from occlusions and/or illumination variations.

In recent years, the arrival of cheap RGB-depth devices has led to many new approaches to MHT, and many of these integrate colour and depth cues to improve each and every stage of the process.

The paper presents the common processing pipeline of these methods and reviews their methodology based on

- how they implement this pipeline

- what role depth plays within each stage of it

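The paper also identifies existing, publicly available benchmark datasets and software resources that fuse colour and depth data for MHT, and presents a brief comparative evaluation of the performance of those works that applied their methods to these datasets.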

Introduction

Human tracking is a key component in many computer vision applications, including

- video surveillance

- smart environments

- assisted living

- advanced driver assistance systems (ADAS)

- sport analysis

Such applications are usually built around RGB sensors and suffer from a variety of limitations, such as occlusions in cluttered or crowded scenes and varying illumination conditions.

The vast literature landscape in this research area has widened even further in the last few years, due to the introduction and popularity of low-cost RGB-depth cameras. This has enabled the development of new algorithms that integrate depth and colour cues to improve detection and tracking systems.

Aim: to summarise the area of multiple human tracking (MHT) from the combination of colour (RGB) and depth (D) data.

The survey does not review methods based only on RGB features; instead, it refers to the reviews by

- Dollár et al. on colour-based pedestrian detection

- Luo et al. for colour-based multi-object tracking

Four main computer vision topics benefit from depth information:

- human activity analysis and recognition

- hand gesture analysis

- three-dimensional (3D) mapping

- object detection and tracking

Benefits & limitations:

- Advantages:

    - The effect of occlusions can be reduced by using the 3D information contained in depth data.

    - More reliable features can be extracted in scenes undergoing illumination variations, since such variations have little impact on depth sensors.

    - Depth can be used to extract a richer description of the scene, which can simplify the tracking problem.

- Limitations:

    - Certain characteristics of depth sensors reduce the reliability of depth data in some operating conditions, e.g. outdoors, where strong sunlight can interfere with the infrared light used by active depth sensors.

Colour and depth data are largely complementary, so combining and processing them efficiently can substantially reduce the effect of the problems that affect each of them individually.

The paper provides a review of state-of-the-art MHT algorithms that integrate depth and colour data, characterising them based on

- trajectory representation and matching

- how they exploit depth information to improve various stages of the processing pipeline.

Multiple people detection and tracking techniques in RGB-D data

- First, the processing pipeline shared by most works in the literature is presented.

- Then, the works are characterised based on:

    - which trajectory representation is used and how it is matched

    - how, and for which purpose, depth data is exploited.

In MHT, detections of multiple people are normally aggregated into independent tracks, one per person, in order to establish their respective trajectories. Tracks may contain descriptions of position, motion, and appearance. The common pipeline consists of:

- an optional Regions of Interest (ROIs) selection stage, which reduces the search space;

- a detection stage, in which people are detected using a generic description of a person;

- a matching step, which associates the new detections with the trajectories based on a matching strategy and a similarity measure, computed from position and, more often than not, appearance.

A variation of the common pipeline:

- The detection and matching stages may be driven by the trajectories and their representation of the tracked person. Currently tracked people are then detected directly at the position predicted by the motion model of their trajectory representation, within a significantly reduced search space.

Motion models used in trajectory representations include:

- zero-velocity motion model: assumes the target is stationary, so its predicted position is its last known position;

- first-order motion model: describes the target's velocity, yielding a first-order characterisation of its movement;

- higher-order motion models, such as one that includes the target's acceleration.

A static appearance model may be built from one or a few initial frames and remain fixed for the trajectory's lifetime, whereas a dynamic appearance model may be derived from all previous observations of the target or from a sliding window of recent ones. Dynamic models are updated as new observations become available, to account for appearance changes caused by varying body orientation relative to the sensor or by changing illumination conditions.
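The sketch below contrasts the two kinds of appearance model, assuming the appearance is a normalised joint RGB colour histogram. The 8-bin quantisation and averaging as the update rule are illustrative assumptions, not a method from the survey. `window=None` corresponds to using all previous observations; a finite `window` corresponds to the sliding-window variant.

```python
from collections import deque
import numpy as np

def colour_histogram(pixels: np.ndarray, bins: int = 8) -> np.ndarray:
    """Normalised joint RGB histogram of an (N, 3) array of 8-bit pixels."""
    hist, _ = np.histogramdd(pixels, bins=(bins,) * 3, range=((0, 256),) * 3)
    hist = hist.ravel()
    return hist / max(hist.sum(), 1e-12)

class StaticAppearanceModel:
    """Built once from a few initial observations, then kept fixed."""
    def __init__(self, initial_hists):
        self.model = np.mean(initial_hists, axis=0)

    def update(self, hist):
        pass  # static: new observations are ignored

class DynamicAppearanceModel:
    """Mean of the last `window` observations; window=None keeps all of them."""
    def __init__(self, window=None):
        self.history = deque(maxlen=window)

    def update(self, hist):
        self.history.append(hist)

    @property
    def model(self):
        # Averaging lets the model follow gradual appearance changes
        return np.mean(self.history, axis=0)
```

A shorter window adapts faster to new body orientations or lighting. The trade-off is that it is more easily corrupted by a few bad observations, for example a brief occlusion by another person.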

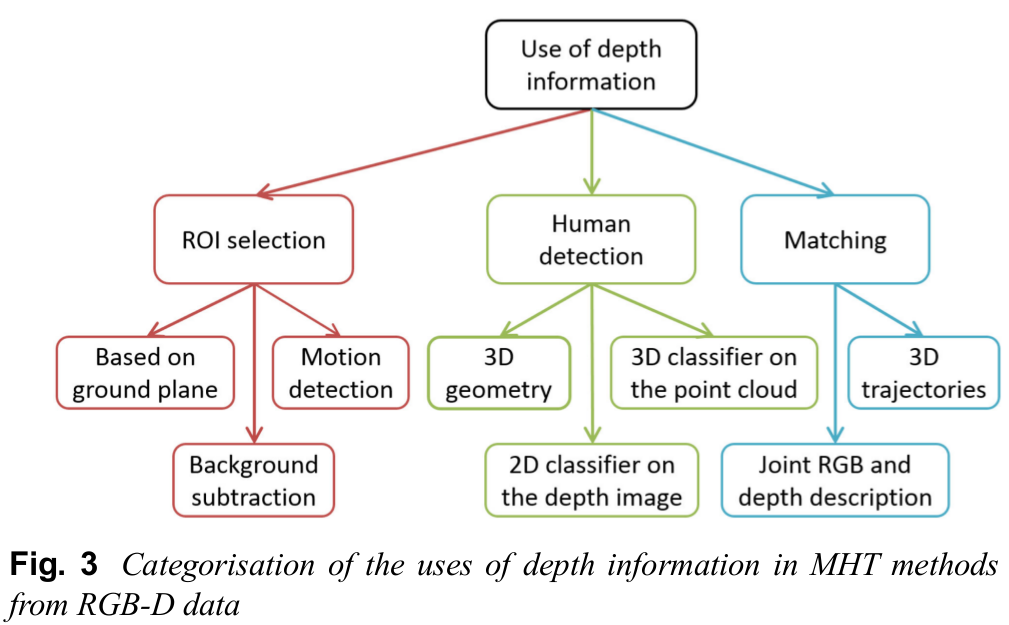

Depth data can be exploited to enhance RGB-based MHT.

The generic description of a person that drives the detection stage is often made up of a number of RGB and depth cues. Possible generic person descriptions from RGB data include:

- Histogram of Oriented Gradients (HOG)

- poselet-based human detector

- the deformable part-based model (DPM)

- the Viola and Jones AdaBoost detector

Survey by MHT pipeline implementation

Crude motion model

Bansal et al. use a zero-velocity motion model in 2D image coordinates.

Salas and Tomasi dynamically build a directed connected graph, using a zero-velocity motion model and an appearance model made up of the colour signature …

First-order motion model

In one approach, the motion model approximates the target's velocity by the mean and variance of its depth variations over the past ten frames.
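The note does not say how the mean and variance are used afterwards. This minimal sketch assumes two things: the mean per-frame depth change serves as the velocity estimate, and the variance serves as its uncertainty. `DepthVelocityModel` and `predict_depth` are hypothetical names.

```python
from collections import deque
import numpy as np

class DepthVelocityModel:
    """Hypothetical sketch: depth velocity from the last `window` depth changes."""

    def __init__(self, window=10):
        # Keep window + 1 depths so that `window` frame-to-frame changes exist
        self.depths = deque(maxlen=window + 1)

    def observe(self, depth):
        self.depths.append(float(depth))

    def velocity(self):
        """(mean, variance) of per-frame depth change; (0.0, 0.0) if < 2 samples."""
        if len(self.depths) < 2:
            return 0.0, 0.0
        diffs = np.diff(np.asarray(self.depths))
        return float(diffs.mean()), float(diffs.var())

    def predict_depth(self):
        """Assumed use: last depth plus the mean per-frame change."""
        mean, _ = self.velocity()
        return self.depths[-1] + mean
```

A large variance signals erratic motion along the depth axis. A tracker could use it to widen the search region around the predicted depth.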

Bajracharya et al. assume a constant velocity, and matching is performed by comparing candidates.

In another approach, motion is modelled as the position and velocity of the tracked person in the previous frame.

Galamakis et al. model motion as the target’s speed, computed between the last two frames, and use it to predict the next position of the target, assuming a constant velocity.

MuHyT maintains a tree of association hypotheses in a multi-hypothesis tracking framework. Matching probabilities for all past and current frames are computed from closeness to the position and velocity predictions, and from appearance similarity. MuHyT grows the hypothesis tree and prunes it to the $k$ best hypotheses at each iteration, to prevent exponential growth of the tree.
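A minimal sketch of the grow-then-prune step is below. It is not MuHyT's implementation. It assumes that per-frame log-likelihoods are already available, with a fixed log-probability for a missed detection. Each hypothesis carries its cumulative log-probability and the full history of per-frame assignments. The sketch enumerates all assignments by brute force, which itself grows exponentially with the number of tracks. Practical multi-hypothesis trackers typically obtain the k best assignments directly, for example with Murty's algorithm.

```python
import heapq
import itertools
import math

MISS = None  # marker: the track is not matched to any detection in this frame

def frame_assignments(n_tracks, n_dets):
    """Yield every assignment of tracks to distinct detections (or MISS)."""
    options = list(range(n_dets)) + [MISS]
    for combo in itertools.product(options, repeat=n_tracks):
        used = [d for d in combo if d is not MISS]
        if len(used) == len(set(used)):  # a detection explains at most one track
            yield combo

def expand_and_prune(hypotheses, log_lik, log_miss, k):
    """Grow each hypothesis with every assignment for the new frame, keep the k best.

    hypotheses: list of (cumulative log-probability, tuple of per-frame assignments)
    log_lik[i][j]: log-likelihood that track i produced detection j
    log_miss: log-probability that a track is not detected
    """
    n_tracks = len(log_lik)
    n_dets = len(log_lik[0]) if n_tracks else 0
    children = []
    for score, history in hypotheses:
        for combo in frame_assignments(n_tracks, n_dets):
            s = score + sum(log_miss if d is MISS else log_lik[i][d]
                            for i, d in enumerate(combo))
            children.append((s, history + (combo,)))
    # Pruning to the k best keeps the tree from growing exponentially
    return heapq.nlargest(k, children, key=lambda h: h[0])

L = [[math.log(0.9), math.log(0.1)], [math.log(0.2), math.log(0.8)]]
hyps = expand_and_prune([(0.0, ())], L, math.log(0.05), k=3)
```

Because whole histories are scored, an assignment that looked worse in an earlier frame can become the best one later. This is the point of keeping several hypotheses alive rather than committing greedily.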

In Munoz Salinas et al., the matching stage finds the globally optimal association of detected candidates to existing tracks using the Hungarian method. The matching likelihoods are computed from the distance to the predicted position and the similarity to the colour-histogram appearance model.
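The sketch below illustrates globally optimal matching on a combined position and colour-histogram cost, under several assumptions. The position term is a Gaussian of the distance, with `sigma` in metres. Appearance similarity is the Bhattacharyya coefficient. The two are multiplied into one likelihood, and pairs above a `max_cost` threshold are rejected. The survey does not specify these choices. `scipy.optimize.linear_sum_assignment` solves the same linear assignment problem as the Hungarian method; recent SciPy versions use a Jonker-Volgenant variant internally.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment

def bhattacharyya(p, q):
    """Bhattacharyya coefficient of two normalised histograms (1 = identical)."""
    return float(np.sum(np.sqrt(np.asarray(p) * np.asarray(q))))

def match(pred_pos, track_hists, det_pos, det_hists, sigma=0.5, max_cost=10.0):
    """Globally optimal track-to-detection assignment.

    pred_pos: (N, 2) predicted track positions; det_pos: (M, 2) detected positions.
    Returns a list of (track index, detection index) pairs.
    """
    pred_pos, det_pos = np.asarray(pred_pos, float), np.asarray(det_pos, float)
    cost = np.empty((len(pred_pos), len(det_pos)))
    for i, (p, h) in enumerate(zip(pred_pos, track_hists)):
        for j, (z, g) in enumerate(zip(det_pos, det_hists)):
            pos_lik = np.exp(-np.sum((p - z) ** 2) / (2 * sigma ** 2))  # Gaussian on distance
            app_lik = bhattacharyya(h, g)                               # colour similarity
            cost[i, j] = -np.log(max(pos_lik * app_lik, 1e-12))         # minimise -log likelihood
    rows, cols = linear_sum_assignment(cost)
    # Reject pairs whose likelihood is too low to be a plausible match
    return [(int(r), int(c)) for r, c in zip(rows, cols) if cost[r, c] < max_cost]
```

Solving the assignment jointly, instead of letting each track pick its nearest detection, prevents two tracks from claiming the same person. This situation is common when people walk close together.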

Munaro et al. find the optimal assignment of detections to tracks in a Global Nearest Neighbour framework. Their matching likelihoods are obtained from the distance to the predicted position and velocity.
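A hedged sketch of Global Nearest Neighbour association driven by position and velocity follows. It does not reproduce Munaro et al.'s actual likelihood. Instead it assumes three things. Each track supplies a predicted state `[x, y, vx, vy]` and an innovation covariance `S`. The velocity implied by a candidate match is the displacement from the track's last position. Pairs are gated with a squared Mahalanobis distance against the 95% chi-square quantile for 4 degrees of freedom. The dictionary keys `last_pos`, `pred` and `S` are hypothetical.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment

GATE = 9.488  # chi-square 95% quantile, 4 degrees of freedom
BIG = 1e6     # cost assigned to pairs outside the gate

def gnn_associate(tracks, detections, dt=1.0):
    """Global Nearest Neighbour association on position and velocity.

    tracks: list of dicts with 'last_pos' (2,), 'pred' (4,) = predicted [x, y, vx, vy],
            and 'S' (4, 4) innovation covariance.
    detections: (M, 2) detected ground-plane positions.
    """
    cost = np.full((len(tracks), len(detections)), BIG)
    for i, t in enumerate(tracks):
        S_inv = np.linalg.inv(t["S"])
        for j, z in enumerate(detections):
            z = np.asarray(z, float)
            vel = (z - np.asarray(t["last_pos"], float)) / dt  # velocity implied by this match
            r = np.concatenate([z, vel]) - np.asarray(t["pred"], float)
            d2 = float(r @ S_inv @ r)  # squared Mahalanobis distance
            if d2 <= GATE:
                cost[i, j] = d2
    rows, cols = linear_sum_assignment(cost)
    # Pairs left at BIG were outside the gate: treat those tracks as unmatched
    return [(int(r), int(c)) for r, c in zip(rows, cols) if cost[r, c] < BIG]
```

Including velocity in the residual helps separate two people who are momentarily close but moving in different directions. A position-only distance would not distinguish them.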

Almazan and Jones use the mean-shift algorithm to find the ROI that best matches the appearance model of a target, and then apply a Kalman filter.
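The note does not detail the Kalman filter Almazan and Jones use. The sketch below assumes a standard 2D constant-velocity Kalman filter whose measurement is the centre of the ROI returned by mean-shift; mean-shift itself is not shown. The isotropic process noise `q * I` is a simplification chosen for brevity.

```python
import numpy as np

class ConstantVelocityKalman:
    """2D constant-velocity Kalman filter: state [x, y, vx, vy], measurement [x, y]."""

    def __init__(self, x0, y0, dt=1.0, q=0.01, r=0.1):
        self.x = np.array([x0, y0, 0.0, 0.0])   # initial velocity assumed zero
        self.P = np.eye(4)                       # initial state uncertainty
        self.F = np.array([[1, 0, dt, 0],
                           [0, 1, 0, dt],
                           [0, 0, 1, 0],
                           [0, 0, 0, 1]], dtype=float)
        self.H = np.array([[1, 0, 0, 0],
                           [0, 1, 0, 0]], dtype=float)
        self.Q = q * np.eye(4)  # process noise (simplified, isotropic)
        self.R = r * np.eye(2)  # measurement noise of the ROI centre

    def predict(self):
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q
        return self.x[:2]

    def update(self, z):
        y = np.asarray(z, dtype=float) - self.H @ self.x  # innovation
        S = self.H @ self.P @ self.H.T + self.R
        K = self.P @ self.H.T @ np.linalg.inv(S)          # Kalman gain
        self.x = self.x + K @ y
        self.P = (np.eye(4) - K @ self.H) @ self.P
        return self.x[:2]
```

In use, `predict()` gives the position where the next mean-shift search could start. `update()` then fuses the ROI centre that mean-shift converged to.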

Some works implement the first-order motion model in a particle filter framework. A potential drawback is that particle filters tend to be computationally expensive and may require optimisations to achieve practical running times. Munoz Salinas et al. use one particle filter per track, with a constant-speed model to predict the next location of the target; new target observations are searched for by maximising a detection probability.
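Below is a minimal sketch of one constant-velocity particle filter per track. It is a generic bootstrap filter, not Munoz Salinas et al.'s method. Their observation step, which searches for the observation that maximises a detection probability, is replaced here by an assumed Gaussian likelihood around a given position measurement. The noise levels, particle count and resampling threshold are illustrative assumptions.

```python
import numpy as np

class ConstantVelocityParticleFilter:
    """Particle filter for one track; each particle is a state [x, y, vx, vy]."""

    def __init__(self, x0, y0, n=500, pos_noise=0.05, vel_noise=0.05,
                 meas_std=0.2, seed=0):
        self.rng = np.random.default_rng(seed)
        self.p = np.zeros((n, 4))
        self.p[:, :2] = [x0, y0] + self.rng.normal(0.0, meas_std, (n, 2))
        self.p[:, 2:] = self.rng.normal(0.0, 0.5, (n, 2))  # initial velocity unknown
        self.w = np.full(n, 1.0 / n)
        self.pos_noise, self.vel_noise, self.meas_std = pos_noise, vel_noise, meas_std

    def predict(self, dt=1.0):
        """Constant-velocity motion plus Gaussian process noise."""
        n = len(self.p)
        self.p[:, :2] += self.p[:, 2:] * dt + self.rng.normal(0.0, self.pos_noise, (n, 2))
        self.p[:, 2:] += self.rng.normal(0.0, self.vel_noise, (n, 2))

    def update(self, z):
        """Reweight particles by a Gaussian likelihood of the observed position z."""
        d2 = np.sum((self.p[:, :2] - np.asarray(z, float)) ** 2, axis=1)
        self.w *= np.exp(-0.5 * d2 / self.meas_std ** 2)
        self.w += 1e-300                      # avoid an all-zero weight vector
        self.w /= self.w.sum()
        if 1.0 / np.sum(self.w ** 2) < len(self.w) / 2:  # effective sample size check
            self._resample()

    def _resample(self):
        """Systematic resampling."""
        n = len(self.w)
        positions = (self.rng.random() + np.arange(n)) / n
        idx = np.minimum(np.searchsorted(np.cumsum(self.w), positions), n - 1)
        self.p = self.p[idx]
        self.w = np.full(n, 1.0 / n)

    def estimate(self):
        """Weighted mean state [x, y, vx, vy]."""
        return self.w @ self.p
```

Every frame touches all `n` particles of every track, which makes the computational cost mentioned above concrete. Fewer particles or a cheaper likelihood are the usual levers for reaching practical running times.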

Another approach uses particle filtering with reversible-jump Markov chain Monte Carlo (RJMCMC) sampling to track multiple people, as well as non-human objects, simultaneously.
